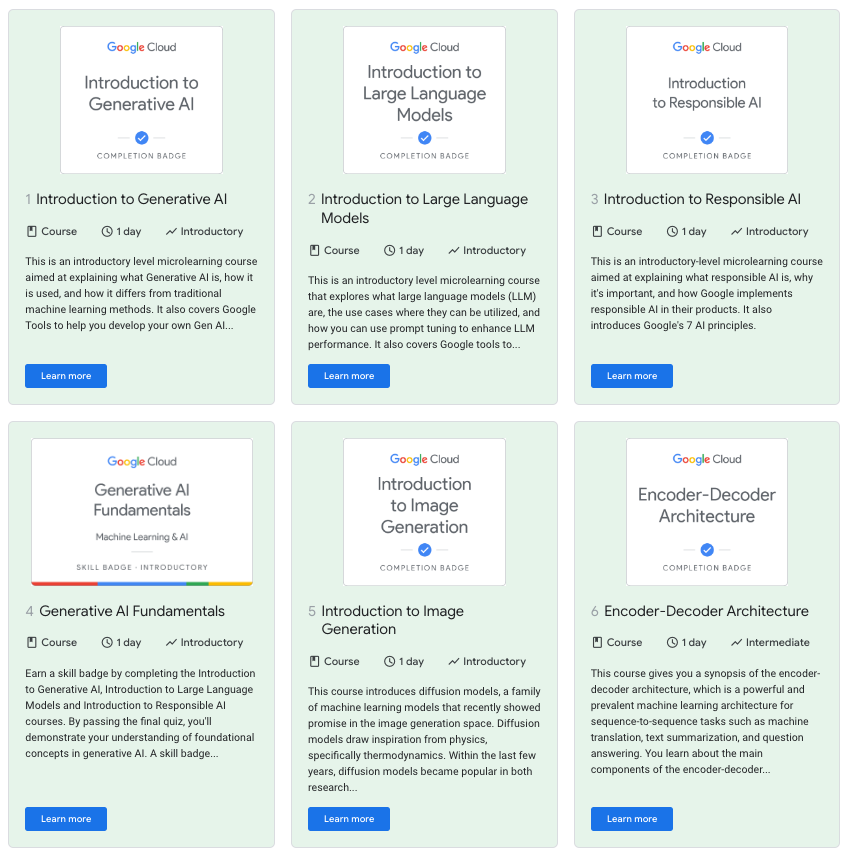

If you’re looking to upskill in Generative AI (GenAI), there’s a Generative AI Learning Path in Google Cloud Skills Boost. It currently consists of 10 courses and provides a good foundation on the theory behind Generative AI and what tools and services Google provides in GenAI. The best part is that it’s completely free!

As I went through these courses myself, I took notes, as I learn best when I write things down. In part 1 of this blog series, I want to share my notes for courses 1 to 6, in case you want to quickly read summaries of these courses.

I highly recommend going through the courses in full. Each course is only 10-20 minutes long, and they’re packed with more information than I present here.

Let’s get started.

1. Introduction to Generative AI

In this first course of the learning path, you learn about Generative AI, how it works, different GenAI model types and various tools Google provides for GenAI.

AI enables computer systems to perform tasks that normally require human intelligence.

ML is a subfield of AI and gives computers the ability to learn without being explicitly programmed.

ML has two flavors: supervised learning, where the training data is labeled, and unsupervised learning, where it is not.
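
To make the difference concrete for myself, here is a minimal sketch. It is my own illustration, not from the course. The data points, the `small`/`large` labels, and all function names are made up. The supervised part uses the labels to compute one centroid per class. The unsupervised part is a tiny 2-means clustering that never sees the labels.

```python
from statistics import mean

# Hypothetical 1-D measurements: three small values and three large ones.
points = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
labels = ["small", "small", "small", "large", "large", "large"]

def fit_supervised(points, labels):
    """Supervised: use the labels to compute one centroid per class."""
    return {
        label: mean(p for p, lab in zip(points, labels) if lab == label)
        for label in set(labels)
    }

def classify(centroids, x):
    """Predict the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))

def cluster_unsupervised(points, iterations=10):
    """Unsupervised: 2-means clustering, no labels involved."""
    centers = [min(points), max(points)]  # simple deterministic start
    assignments = []
    for _ in range(iterations):
        # Assign every point to its nearest center.
        assignments = [
            min(range(2), key=lambda k: abs(centers[k] - p)) for p in points
        ]
        # Move each center to the mean of the points assigned to it.
        for k in range(2):
            members = [p for p, a in zip(points, assignments) if a == k]
            if members:
                centers[k] = mean(members)
    return assignments

centroids = fit_supervised(points, labels)
print(classify(centroids, 4.0))      # a human-readable label
print(cluster_unsupervised(points))  # only anonymous cluster ids
```

The supervised model can answer with a meaningful label because it was given labels. The clustering only discovers that there are two groups and leaves naming them to you.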

Deep learning is a subset of ML. It uses artificial neural networks, which allow models to capture complex patterns.

Generative AI (GenAI) is a subset of Deep Learning. It creates new content based on what it has learned from existing content. Learning from existing content is called training and results in the creation of a statistical model. When given a prompt, GenAI uses a statistical model to predict the response.

Large Language Models (LLMs) are also a subset of Deep Learning.

Deep Learning Model Types are classified into two: Discriminative and Generative.

- Discriminative is used to classify (e.g. an image is predicted to be a dog).

- Generative generates new data similar to the data it was trained on (e.g. a dog image is generated from another dog image). Generative language models such as LaMDA, PaLM, and GPT learn about patterns in language through training data and, given some text, predict what comes next.
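
The "predict what comes next" idea can be sketched with a toy bigram model. This is my own simplification and not how LaMDA, PaLM or GPT work internally: it just counts which word follows which in a tiny, made-up corpus and predicts the most frequent follower. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            counts[current_word][next_word] += 1
    return counts

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical training data.
corpus = [
    "the dog chased the ball",
    "the dog ate the bone",
    "the cat chased the mouse",
]
model = train_bigram_model(corpus)

print(predict_next(model, "the"))     # "dog" follows "the" most often
print(predict_next(model, "chased"))  # "the" always follows "chased"
```

Real language models replace the counting table with a neural network and look at much more context than a single word, but training and prediction play the same roles.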

The power of Generative AI comes from Transformer models. In Transformers, there’s an encoder and decoder.

Hallucinations are generated words or phrases that are nonsensical or grammatically incorrect. They usually happen when the model is not trained on enough data, is trained on dirty data, or is not given enough context or constraints.

A foundation model is a large AI model pre-trained on a vast quantity of data, designed to be adapted and fine-tuned to a wide range of tasks such as sentiment analysis, image captioning, object recognition.

Vertex AI offers Model Garden for foundation models. For example, it includes the PaLM API and BERT for language, and Stable Diffusion for vision.

GenAI Studio lets you quickly explore and customize GenAI models for your applications. It helps developers create and deploy GenAI models by providing a variety of tools and resources. There’s a library of pre-trained models, a tool for fine-tuning models, and a tool for deploying models to production.

GenAI App Builder lets you create GenAI apps without writing any code, using a visual editor with a drag-and-drop interface.

PaLM API lets you test and experiment with Google’s LLMs. It gives developers access to models optimized for different use cases.

MakerSuite is an approachable way to start prototyping and building generative AI applications. It lets you iterate on prompts, augment your dataset, and tune custom models.

2. Introduction to Large Language Models

In this course, you learn about Large Language Models (LLMs) and how they can be tuned for different use cases.

LLMs are a subset of Deep Learning.

LLMs are large, general purpose language models that can be pre-trained and then fine-tuned for specific purposes.

Benefits of using LLMs:

- A single model can be used for different tasks.

- The fine-tuning process requires minimal field data.

- The performance is continuously growing.

Pathways Language Model (PaLM) is an example of an LLM. It is a transformer model with 540 billion parameters.

A transformer model consists of an encoder and a decoder. The encoder encodes the input sequence and passes it to the decoder. The decoder learns to decode the representations for a relevant task.

LLM development vs. traditional ML development:

- In LLM development, when using pre-trained APIs, you need no ML expertise and no training examples, and you don’t need to train a model. All you need to think about is prompt design.

- In traditional ML development, you need ML expertise, training examples, and a training run with its compute time and hardware.

There are 3 kinds of LLM:

- Generic language: Predict the next word based on the language in the training data.

- Instruction tuned: Trained to predict a response to the instructions given in the input.

- Dialog tuned: Trained to have a dialog by predicting the next response.

Vertex AI’s Model Garden provides task-specific foundation models, e.g. language sentiment analysis and vision occupancy analysis.

Tuning is the process of adapting a model to a new domain (e.g. the legal or medical domain) by training the model on new data.

Fine-tuning means bringing your own dataset and retraining the model by tuning every weight in the LLM. This requires a big training job and hosting your own fine-tuned model, which is very expensive.

Parameter-efficient tuning methods (PETM) tune an LLM on your custom data without duplicating the model. The base model is not altered; instead, small add-on layers are tuned.
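
Here is how I picture the "frozen base plus small add-on" idea, as a toy sketch under heavy assumptions. The "pre-trained model" is a single hypothetical weight (`BASE_WEIGHT`), the add-on is just a trainable scale and bias, and the training data is made up. This is not what Vertex AI or any specific tuning method does internally. It only shows that the add-on can adapt the output while the base weight never changes.

```python
# Frozen "pre-trained" weight: a hypothetical stand-in for billions of parameters.
BASE_WEIGHT = 2.0

def base_model(x):
    """The pre-trained model. It is never modified during tuning."""
    return BASE_WEIGHT * x

def tune_adapter(examples, learning_rate=0.05, epochs=2000):
    """Fit a small add-on (scale and bias) on top of the frozen base model."""
    scale, bias = 0.0, 0.0  # the only trainable parameters
    n = len(examples)
    for _ in range(epochs):
        grad_scale = grad_bias = 0.0
        for x, target in examples:
            error = base_model(x) + scale * x + bias - target
            # Gradients of the mean squared error w.r.t. scale and bias.
            grad_scale += 2 * error * x / n
            grad_bias += 2 * error / n
        scale -= learning_rate * grad_scale
        bias -= learning_rate * grad_bias
    return scale, bias

# Hypothetical "new domain" data that follows y = 3x + 1.
domain_examples = [(0.0, 1.0), (1.0, 4.0), (2.0, 7.0), (3.0, 10.0)]
scale, bias = tune_adapter(domain_examples)

def tuned_model(x):
    """Frozen base model plus the small tuned add-on."""
    return base_model(x) + scale * x + bias

print(round(tuned_model(4.0), 3))  # should land close to 3 * 4 + 1
print(BASE_WEIGHT)                 # the base weight is untouched
```

Because only `scale` and `bias` are stored per task, many tuned variants can share one copy of the base model, which is the point of not duplicating it.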

3. Introduction to Responsible AI

In this course, you learn about Google’s AI principles:

- AI should be socially beneficial.

- AI should avoid creating or reinforcing unfair bias.

- AI should be built and tested for safety.

- AI should be accountable to people.

- AI should incorporate privacy design principles.

- AI should uphold high standards of scientific excellence.

- AI should be made available for uses that accord with these principles.

Additionally, Google will not design or deploy AI in 4 areas:

- Technologies that cause or are likely to cause overall harm.

- Weapons or other technologies whose principal purpose or implementation is to cause or directly facilitate injury to people.

- Technologies that gather or use information for surveillance that violates internationally accepted norms.

- Technologies whose purpose contravenes widely accepted principles of international law and human rights.

4. Generative AI Fundamentals

This is just a placeholder course with a quiz to make sure you have completed the first 3 courses.

5. Introduction to Image Generation

The course is mainly about diffusion models, a family of models that have recently shown tremendous promise in the image generation space.

Image Generation Model Families:

- Variational Autoencoders (VAEs): Encode images to a compressed size, then decode back to original size, while learning the distribution of the data.

- Generative Adversarial Networks (GANs): Pit two neural networks against each other.

- Autoregressive Models: Generate images by treating an image as a sequence of pixels.

Diffusion models: A newer trend in generative models.

- Unconditioned generation: Models have no additional input or instruction. They can train on images of faces and then generate new faces, or improve the resolution of images.

- Conditioned generation: Models take an extra input, which enables text-to-image (Mona Lisa with a cat face), image inpainting (remove the woman from the image), or text-guided image-to-image (disco dancer with colorful lights).

The essential idea of diffusion models is to systematically and slowly destroy structure in a data distribution through an iterative forward diffusion process and then learn a reverse diffusion process that restores the structure in data, yielding a highly flexible and tractable generative model of the data.
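
The forward (structure-destroying) half of that idea is easy to sketch. The snippet below is a minimal illustration of my own and not the course's code. It uses a sine wave as a hypothetical stand-in for an image, a constant noise level `beta` that I picked arbitrarily, and the correlation with the original signal as a rough measure of how much structure is left. The reverse process needs a trained neural network and is not shown.

```python
import math
import random

def forward_diffusion(x0, num_steps, beta, rng):
    """Repeatedly blend the data with Gaussian noise; return every step."""
    keep, noise = math.sqrt(1.0 - beta), math.sqrt(beta)
    trajectory = [list(x0)]
    for _ in range(num_steps):
        previous = trajectory[-1]
        trajectory.append(
            [keep * v + noise * rng.gauss(0.0, 1.0) for v in previous]
        )
    return trajectory

def correlation(a, b):
    """Pearson correlation, used as a rough measure of remaining structure."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

# Structured "data": a sine wave (a hypothetical stand-in for an image).
x0 = [math.sin(2 * math.pi * i / 50) for i in range(1000)]
trajectory = forward_diffusion(x0, num_steps=200, beta=0.05, rng=random.Random(0))

# Correlation with the original drops as more noise steps are applied.
for step in (0, 10, 200):
    print(step, round(correlation(x0, trajectory[step]), 2))
```

Each step keeps a little less of the signal and adds a little more noise, so after enough steps the data is essentially pure noise. A diffusion model is trained to undo these steps one at a time.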

6. Encoder-Decoder Architecture

In this course, you learn about the Encoder-Decoder architecture that is at the core of LLMs.

Encoder-Decoder is a sequence-to-sequence architecture: it takes a sequence of words as input and outputs another sequence.

Typically, it has 2 stages:

- An encoder stage that produces a vector representation of the input sentence.

- A decoder stage that creates the sequence output.

To train a model, you need a dataset, a collection of input/output pairs. You can then feed this dataset to the model, which corrects its weights during training based on the error it produces on a given input in the dataset.

A subtle point is that, at each step, the decoder outputs the probability that each token in the vocabulary is the next one. You then use these probabilities to choose a word.

There are several approaches to selecting a word. The simplest one, greedy search, is to generate the token that has the highest probability. A better approach is beam search, where the decoder evaluates the probability of sentence chunks rather than individual words.
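
A small sketch helped me see why beam search can beat greedy search. The probability table below is entirely made up and stands in for a real decoder, and the function names are my own. It is built so that the most likely first word ("the") does not lead to the most likely two-word sequence.

```python
# Hypothetical decoder output: next-token probabilities for each prefix.
NEXT_TOKEN_PROBS = {
    (): {"the": 0.6, "a": 0.4},
    ("the",): {"dog": 0.4, "cat": 0.35, "bird": 0.25},
    ("a",): {"cat": 0.9, "dog": 0.1},
}

def greedy_search(next_probs, steps):
    """Pick the single most probable token at every step."""
    sequence, prob = (), 1.0
    for _ in range(steps):
        token, p = max(next_probs[sequence].items(), key=lambda kv: kv[1])
        sequence += (token,)
        prob *= p
    return sequence, prob

def beam_search(next_probs, steps, beam_width):
    """Keep the `beam_width` most probable partial sequences at every step."""
    beams = [((), 1.0)]
    for _ in range(steps):
        candidates = [
            (seq + (token,), prob * p)
            for seq, prob in beams
            for token, p in next_probs[seq].items()
        ]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return beams[0]

greedy_seq, greedy_prob = greedy_search(NEXT_TOKEN_PROBS, steps=2)
beam_seq, beam_prob = beam_search(NEXT_TOKEN_PROBS, steps=2, beam_width=2)

print(greedy_seq, round(greedy_prob, 2))  # commits to "the" first
print(beam_seq, round(beam_prob, 2))      # keeps "a" alive and finds "a cat"
```

Greedy search commits to "the" and ends up with a sequence probability of about 0.24, while beam search with a width of 2 keeps "a" around long enough to find "a cat" at about 0.36. Real decoders usually work with log-probabilities to avoid multiplying many small numbers.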

In LLMs, the simple RNN within the encoder-decoder is replaced by transformer blocks, which are based on the attention mechanism.

There’s also a hands-on Encoder-Decoder Architecture: Lab Walkthrough that accompanies the course.

Hope you found these notes useful. In the next post, I will go through my notes for courses 7 to 10. If you have questions or feedback, feel free to reach out to me on Twitter @meteatamel.