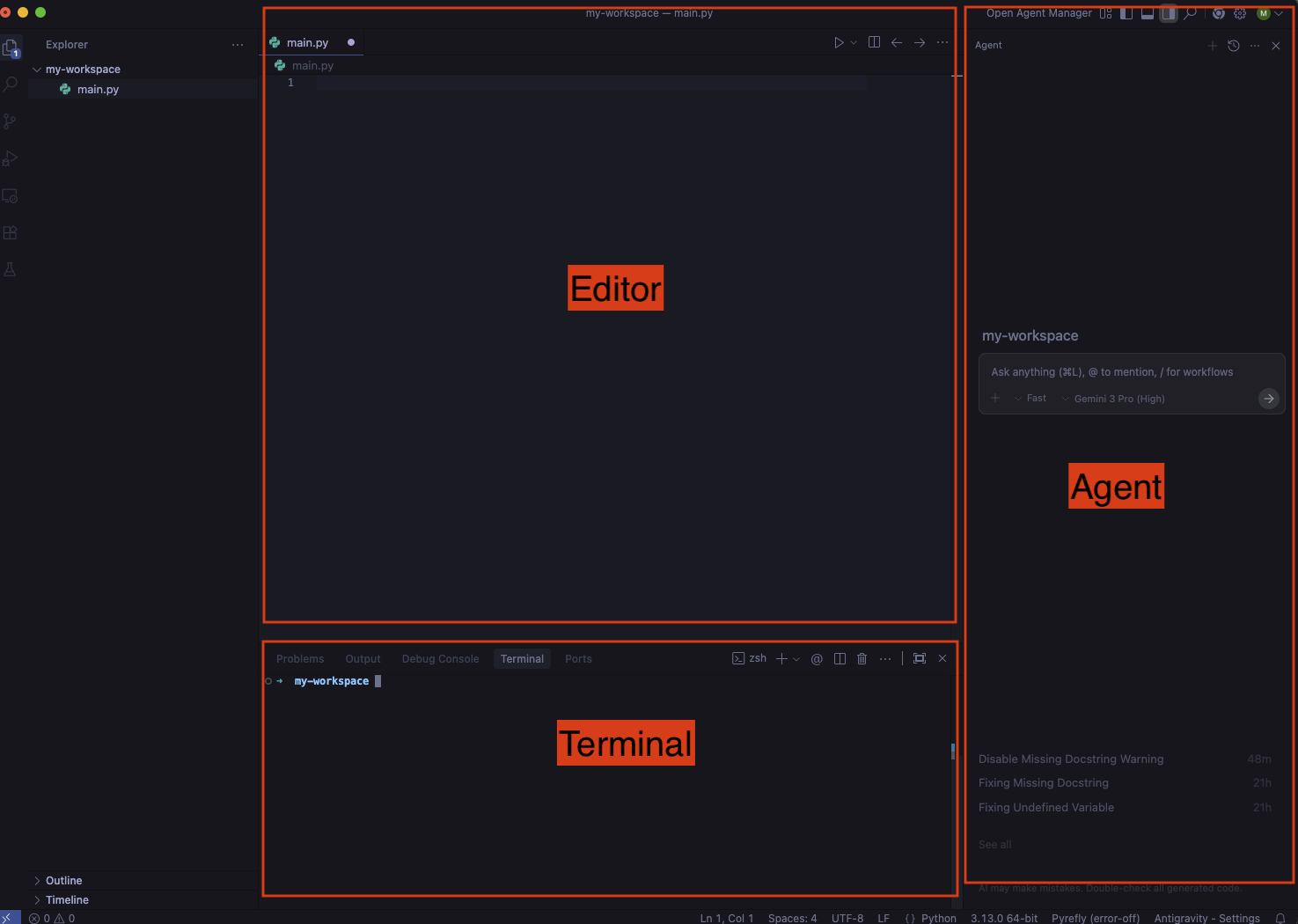

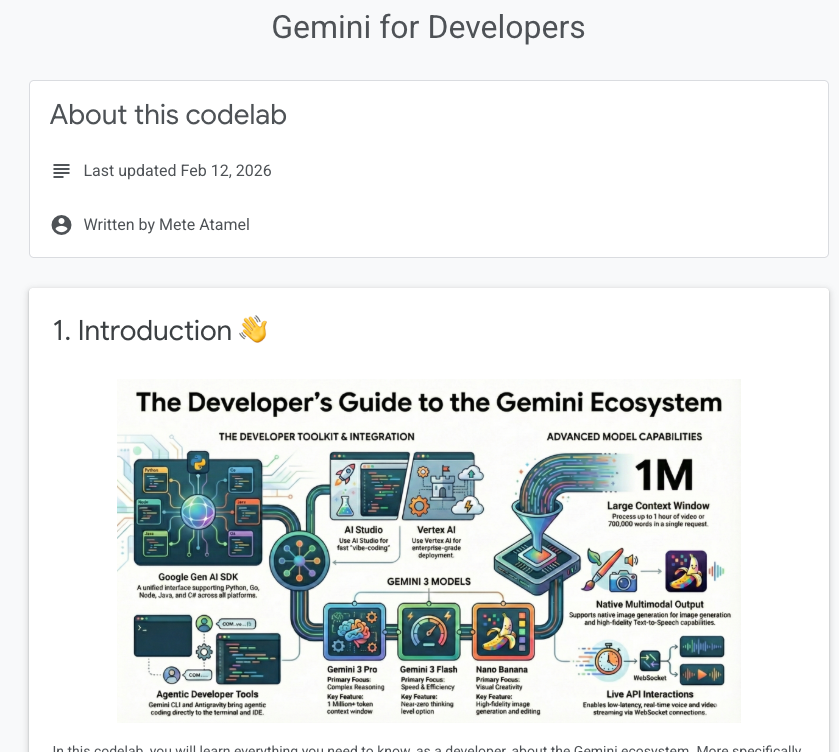

The Gemini ecosystem has evolved into a comprehensive suite of models, tools, and APIs. Whether you are “vibe-coding” a web app or deploying enterprise-grade agents, navigating the options can be overwhelming.

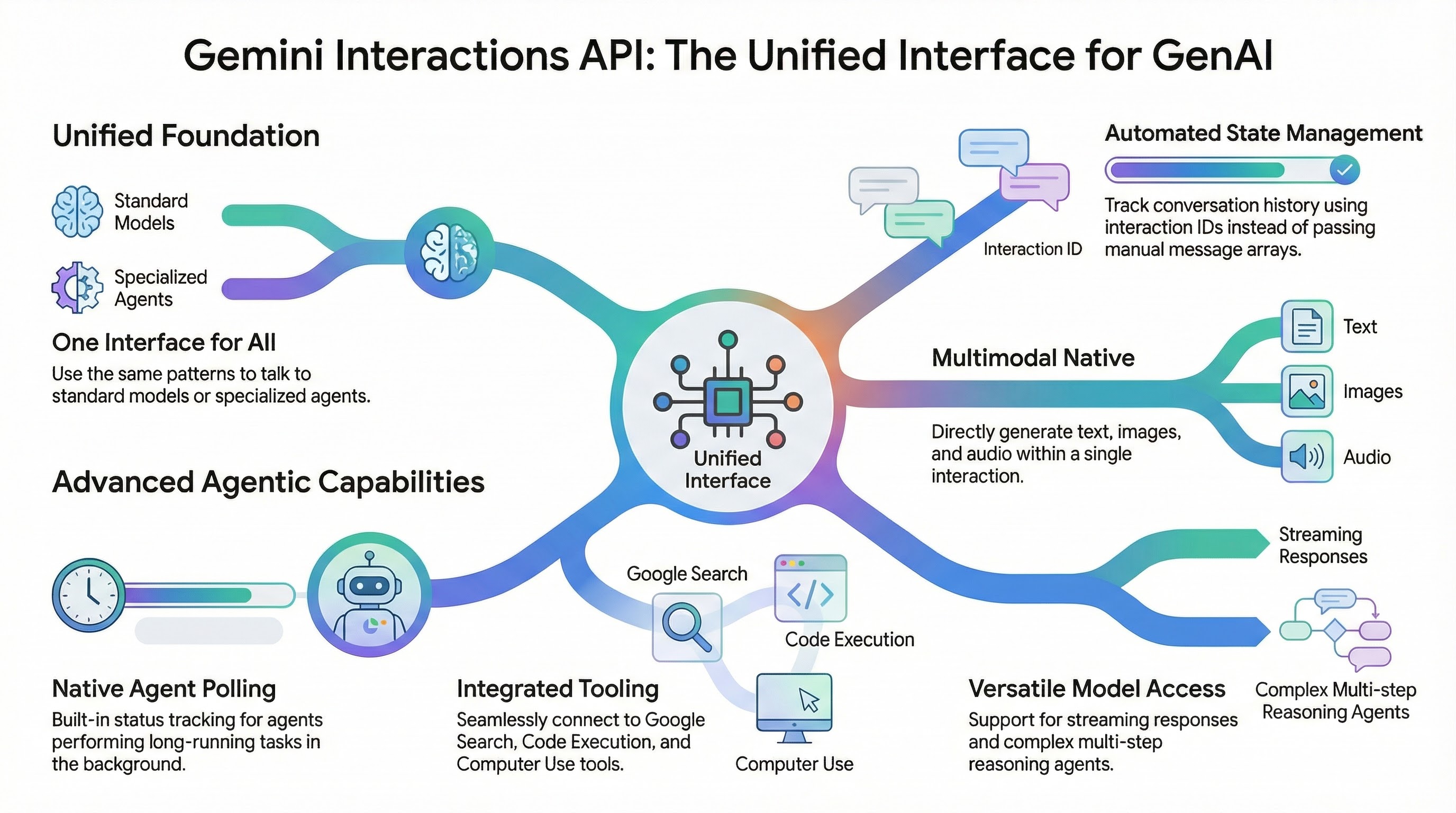

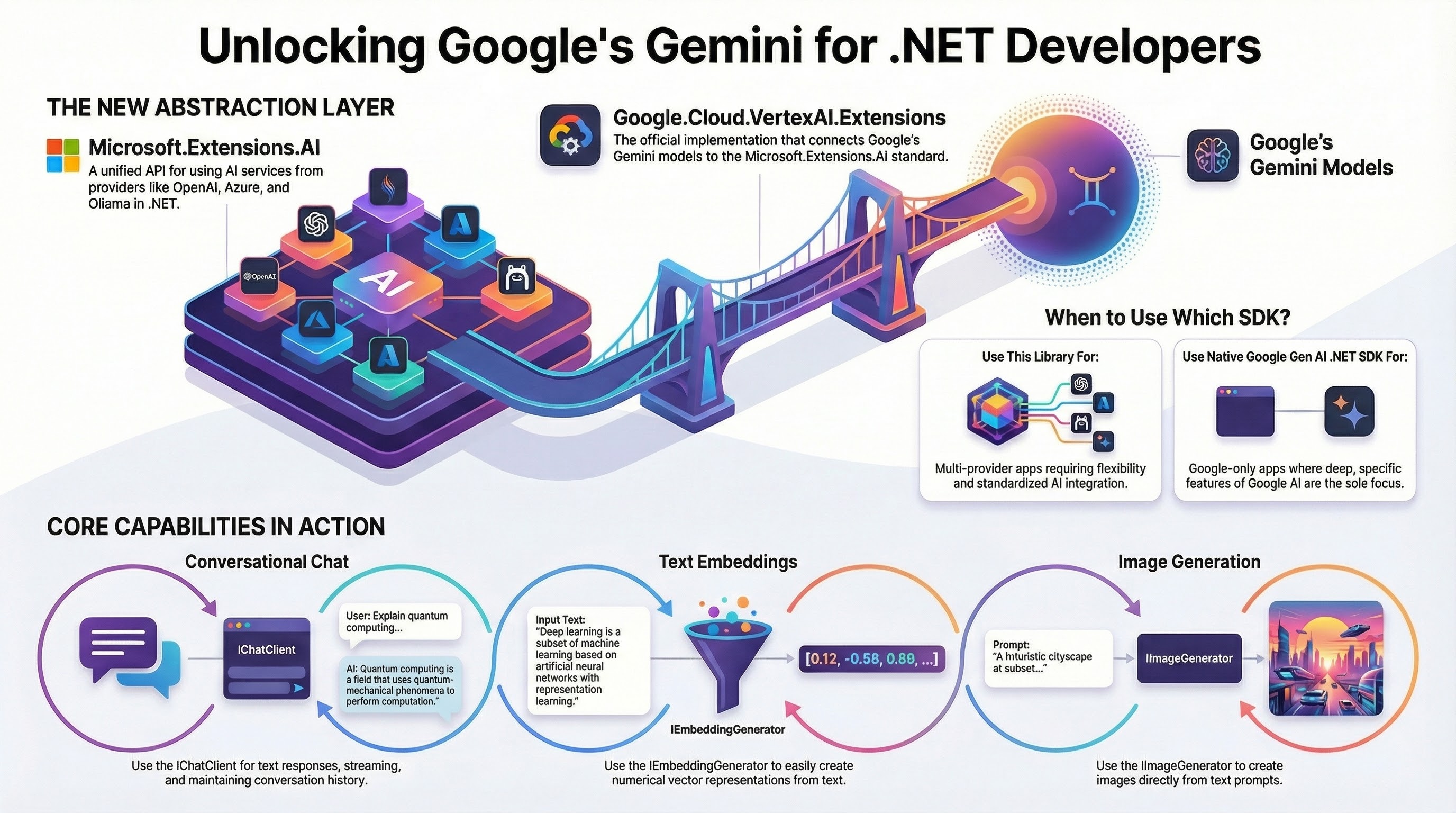

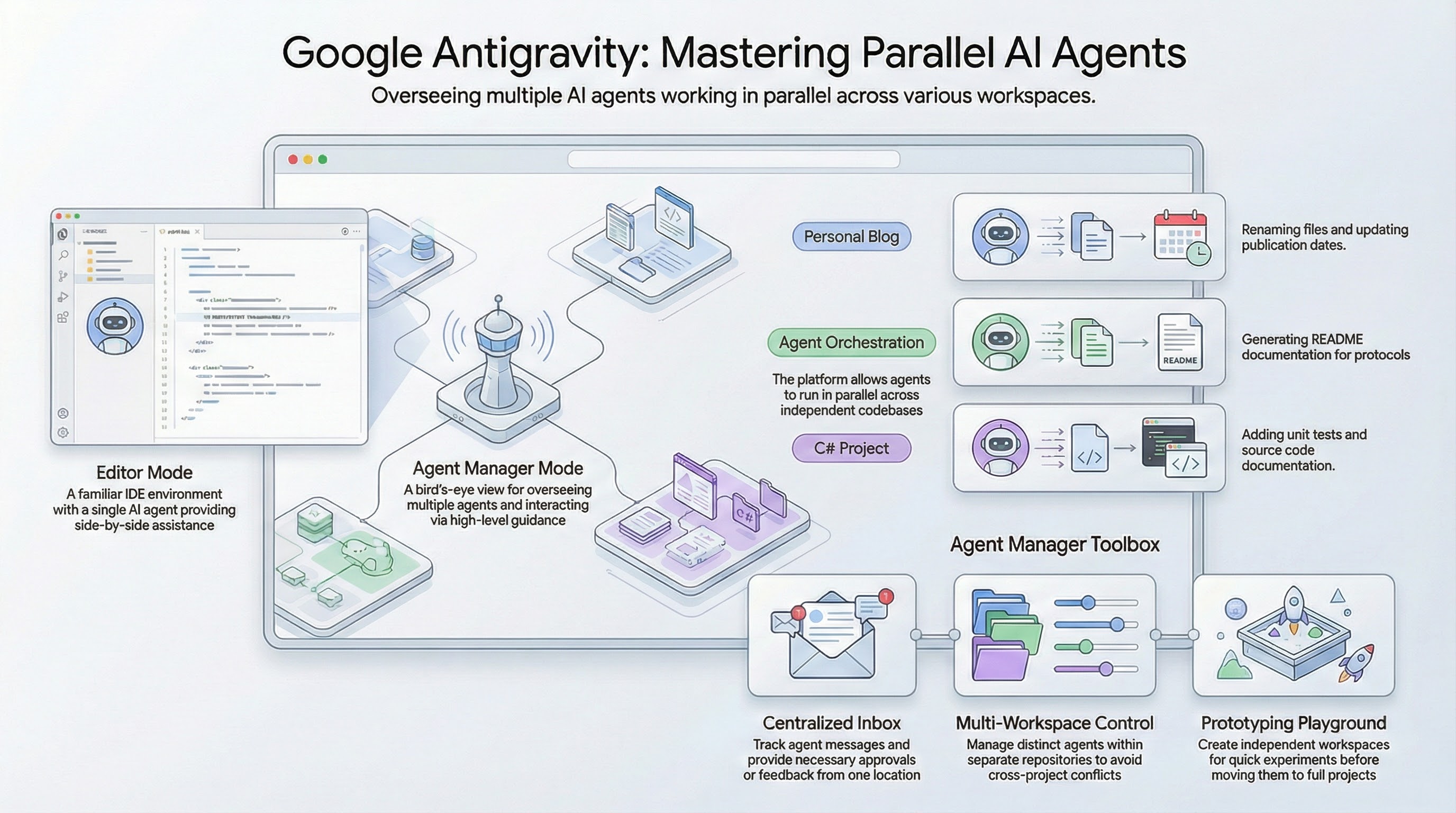

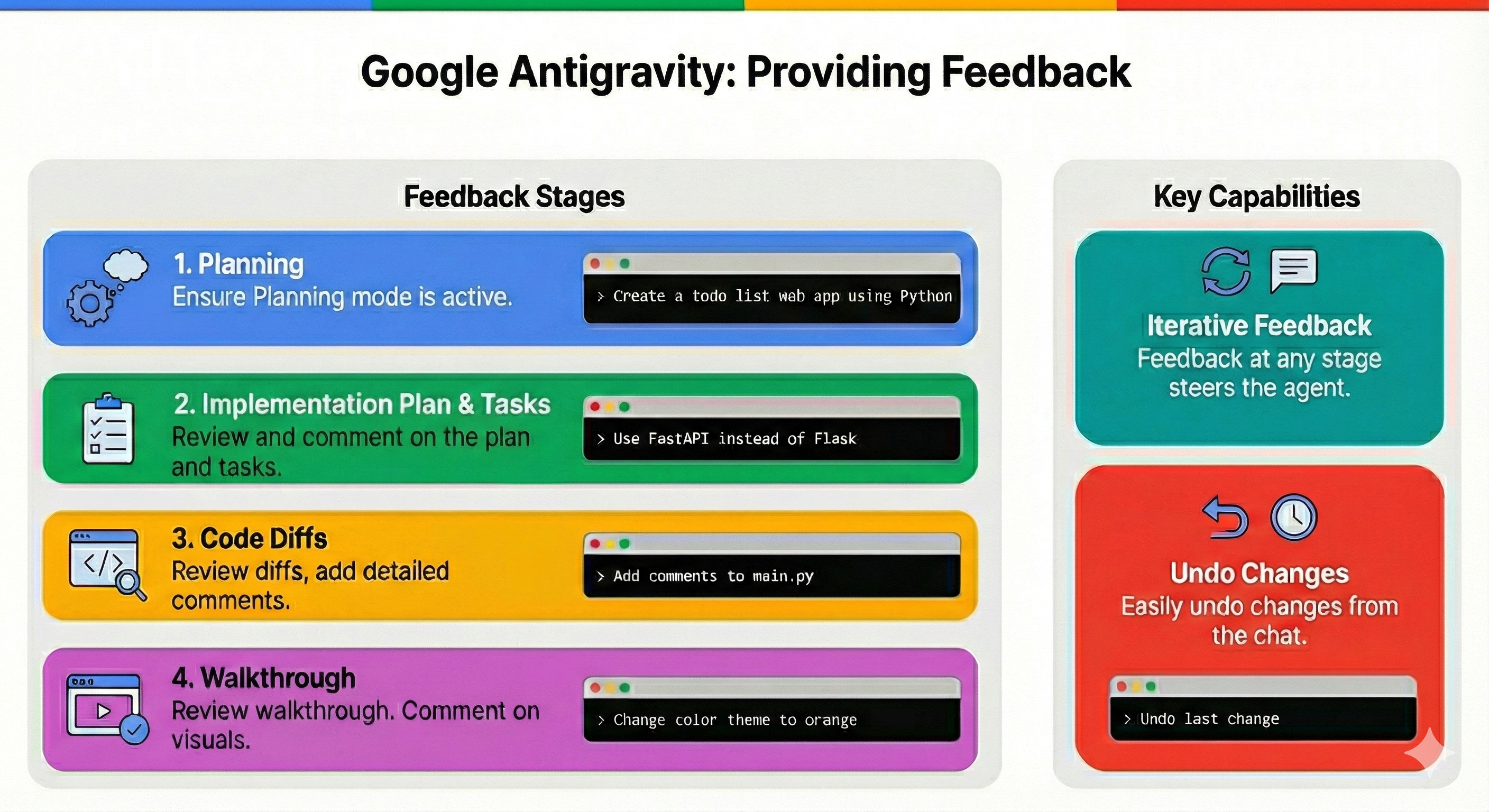

I am happy to announce a new Gemini for Developers codelab. It walks you through the Gemini ecosystem end to end: the different model flavors, the tools powered by Gemini, and integration using the Google Gen AI SDK and the new Interactions API.
