In part 1, I talked about the initial app and its challenges. In part 2, I talked about the lift & shift to the cloud with some unexpected benefits. In this part 3 of the series, I’ll talk about how we transformed our Windows-only .NET Framework app to a containerized multi-platform .NET Core app and the huge benefits we got along the way.

Why?

The initial Windows VM-based cloud setup served us well, with minimal issues, for roughly 2 years (from early 2017 to early 2019). In early 2019, we wanted to revisit the architecture. This was mainly driven by advances in the tech scene, namely:

- .NET Core, the modular and modern version of .NET Framework, was becoming very popular. It was something we had to look into for the next generation of our app.

- Windows: Our dependency on Windows severely limited where we could deploy our app. We wanted to break this dependency to unlock more deployment options.

- Containers, and projects around them such as Kubernetes, had also become very popular. We wanted to see whether our app could be containerized and possibly deployed to Google Kubernetes Engine (GKE).

- Costs: We wanted to avoid paying for Windows licenses and for 2 VMs running at all times on Compute Engine.

.NET Core

Our first priority was to see whether the app could run on .NET Core. I initially reserved a weekend to investigate and port the app to .NET Core, expecting that it would require a major rewrite in many places.

I started investigating on a Saturday morning, and by lunchtime I had the basics of the app running on .NET Core 2.2. I was surprised that it took only half a day. Microsoft had excellent documentation on porting (e.g. Overview of porting from .NET Framework to .NET Core), as well as tools such as the Portability Analyzer, to check whether your app can be ported, and the dotnet try-convert tool, to actually convert it.

Instead of relying on tools, we simply copied and pasted our projects' code into new .NET Core projects and got them working one by one. This mainly involved adopting .NET Core conventions, finding the .NET Core versions of our libraries on NuGet, and finally porting the tests to make sure everything worked. We were lucky: the app was simple and relatively modular, it had good test coverage, and all of its NuGet dependencies already had .NET Core versions.
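Porting projects "one by one" works best in dependency order: leaf libraries first, then the projects that reference them, with tests last. The sketch below is a minimal Python illustration of computing such an order with the standard library's graphlib.TopologicalSorter (Python 3.9+). The project names and references are hypothetical, not our actual solution, and this is one reasonable ordering strategy rather than the exact process we followed.

```python
from graphlib import TopologicalSorter

# Hypothetical solution layout: each project maps to the projects it references.
# These names are illustrative only, not the real projects from our app.
PROJECT_REFERENCES: dict[str, set[str]] = {
    "App.Web": {"App.Services", "App.Models"},
    "App.Services": {"App.Data", "App.Models"},
    "App.Data": {"App.Models"},
    "App.Models": set(),
    "App.Tests": {"App.Web", "App.Services"},
}


def porting_order(references: dict[str, set[str]]) -> list[str]:
    """Return an order in which every project comes after all projects it references."""
    # TopologicalSorter treats the mapped values as predecessors, so referenced
    # projects are emitted before the projects that depend on them.
    return list(TopologicalSorter(references).static_order())


if __name__ == "__main__":
    for step, project in enumerate(porting_order(PROJECT_REFERENCES), start=1):
        print(f"{step}. {project}")
```

With this hypothetical layout, the order is App.Models, App.Data, App.Services, App.Web, and finally App.Tests. If two projects reference each other, static_order() raises graphlib.CycleError, which is itself a hint that those projects need untangling before porting.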

Containerization (Linux vs. Windows)

Our main motivation for porting to .NET Core was to be able to run the app in a Linux container on Docker. That would not only break our dependency on Windows but also let us deploy the app beyond Windows Server VMs.

Containerization has its own challenges, especially if you're not too familiar with the intricacies of Docker (here's an example), but it was relatively straightforward for our app thanks to Microsoft's base images and documentation.

A note on Windows containers: at the time, Windows containers existed, but Google Cloud offered little support for them. That's why we thought it was a good idea to port to .NET Core and make the app work on Linux. If porting isn't possible because of some Windows dependency, Windows containers are now supported on Google Kubernetes Engine (GKE), making them a viable option for getting the benefits of containers without a major rewrite.

App Engine vs. Google Kubernetes Engine (GKE)

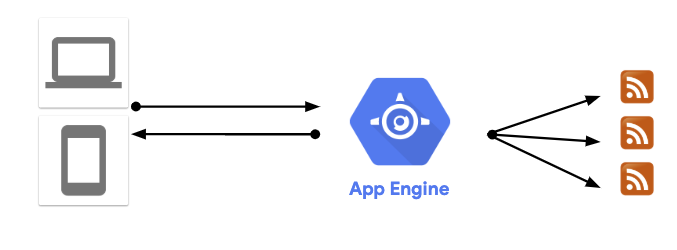

Once our app was running in a container, it was time to move away from Windows Server VMs to a Linux- and container-friendly environment. We had 2 main choices: App Engine (Flex) and GKE. In the end, we decided to go with App Engine, mainly because of:

- Kubernetes complexity: Kubernetes is great if you have many microservices with different requirements and want to manage them individually in a fine-grained way. We didn't feel that the complexity of Kubernetes was justified for our single monolithic app.

- App Engine features: Deploying to App Engine took a single command, and we got 2 instances by default, autoscaling without any configuration, and useful features such as versions and traffic splitting.
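Traffic splitting sends a configurable share of requests to each deployed version of an app. As a rough illustration of the general idea, and not App Engine's actual implementation, the Python sketch below assigns each client to a version deterministically by hashing a client identifier, so the same client keeps seeing the same version. The version names, the 90/10 weights, and the use of SHA-256 are all assumptions chosen for the example.

```python
import hashlib

# Hypothetical split: 90% of clients on the current version, 10% on a new one.
TRAFFIC_SPLIT: dict[str, int] = {"v1": 90, "v2": 10}  # percentages, must sum to 100


def pick_version(client_id: str, split: dict[str, int]) -> str:
    """Map a client to a version; the same client_id always gets the same version."""
    if sum(split.values()) != 100:
        raise ValueError("split percentages must sum to 100")
    # Hash the client id to a stable bucket in the range 0..99.
    digest = hashlib.sha256(client_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    # Give each version a contiguous range of buckets sized by its percentage.
    threshold = 0
    for version, percent in split.items():
        threshold += percent
        if bucket < threshold:
            return version
    raise AssertionError("unreachable when percentages sum to 100")


if __name__ == "__main__":
    counts = {version: 0 for version in TRAFFIC_SPLIT}
    for i in range(10_000):
        counts[pick_version(f"client-{i}", TRAFFIC_SPLIT)] += 1
    print(counts)  # counts should be close to a 90/10 split
```

Hashing a stable identifier (rather than picking randomly per request) keeps each client pinned to one version, which matters when the two versions behave differently.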

Not only were we free from Windows (finally!), but we also kept the redundancy and load balancing we had on Compute Engine, with much less hassle on App Engine.

On the flip side, the App Engine-based solution was suboptimal in some ways:

- VM-based: App Engine (Flex) apps still run on virtual machines. Sure, you don't have to see or manage those VMs most of the time, and it's nice that they're Linux-based, but you can't ignore them completely the way you can on a truly serverless platform.

- Pricing: Since you run on VMs, you’re billed like VMs (per second) even if your app is not being used.

- Slow deployments: Deploying custom images was slow enough (up to 10 minutes) to affect our development cycle.
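To make the pricing point concrete, here is a back-of-the-envelope Python sketch comparing VM-style billing (every second an instance is up, idle or not) with a hypothetical model that bills only while requests are being handled. The hourly rate and the 10% busy fraction are made-up numbers for illustration, not real App Engine prices; the 2 instances mirror the App Engine (Flex) default.

```python
SECONDS_PER_HOUR = 3600
SECONDS_PER_30_DAY_MONTH = 30 * 24 * SECONDS_PER_HOUR

# Made-up per-instance hourly rate in USD, for illustration only (not a real price).
HYPOTHETICAL_HOURLY_RATE = 0.05


def billed_cost(instances: int, billed_seconds: float, hourly_rate: float) -> float:
    """Cost of `instances` instances, each billed for `billed_seconds` seconds."""
    return instances * billed_seconds * hourly_rate / SECONDS_PER_HOUR


if __name__ == "__main__":
    instances = 2
    busy_fraction = 0.10  # hypothetical: the app serves requests 10% of the time

    # VM-style: every second the instances are up is billed, whether busy or idle.
    always_on = billed_cost(instances, SECONDS_PER_30_DAY_MONTH, HYPOTHETICAL_HOURLY_RATE)
    # Hypothetical pay-per-use: only the busy seconds are billed.
    busy_only = billed_cost(
        instances, SECONDS_PER_30_DAY_MONTH * busy_fraction, HYPOTHETICAL_HOURLY_RATE
    )

    print(f"always on: ${always_on:.2f}/month")
    print(f"busy only: ${busy_only:.2f}/month")
```

With these assumed numbers it prints $72.00 versus $7.20 per month: under VM-style billing, the 90% of the time the app sits idle still costs money.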

Lessons Learned

There were many changes and lessons learned in this phase of the app:

- Refactor for clear benefits: We didn’t refactor the app for almost 2 years because there was no clear benefit. It’s only after .NET Core became mainstream and multi-platform and containerization offered better and cheaper deployment options that the benefits outweighed the dependency on Windows.

- Solid functional tests are crucial: Our port to .NET Core was successful because we had solid functional tests to rely on. Without them, I don't think we would have pushed through such a major refactoring with so much confidence.

- Project organization is more important than you think: How you structure your solution, projects, libraries, and packages makes a big difference when you need to refactor. Because our projects were well organized with clear dependencies, we were able to port them to .NET Core one by one.

- There's no magic bullet: Deploying to App Engine (Flex) solved our main problem (the Windows dependency), but it didn't solve everything (VM-based pricing), and it introduced other problems (slow deployments). It's important to remember that there's no perfect solution; it's all about compromises.

Next

Removing the Windows dependency was a major accomplishment of this phase of our app. However, our app was still a single monolith that ran on VMs. In the next and final post of the series, I’ll talk about how we moved to a serverless microservices architecture with Cloud Run.