Pic-a-Daily Serverless Workshop

As you might know, Guillaume Laforge and I have a Pic-a-Daily Serverless Workshop. In this workshop, we build a picture sharing application using Google Cloud serverless technologies such as Cloud Functions, App Engine, Cloud Run and more.

We recently added a new service to the workshop. In this blog post, I want to talk about the new service and how Eventarc helped us get events to it.

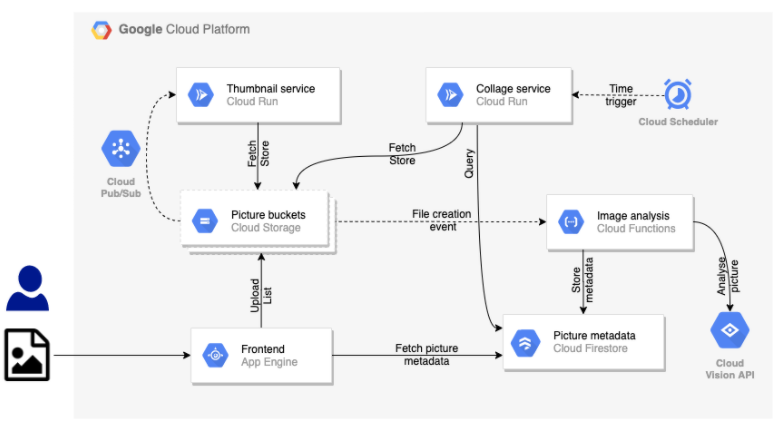

Previous architecture

This was the previous architecture of Pic-a-Daily:

To recap:

- A user uploads a picture to the pictures bucket via a frontend.

- Image analysis Cloud Function receives the upload event, extracts the metadata of the image and saves it to Firestore.

- Thumbnail service receives the upload event via Pub/Sub, resizes the image and saves it to the thumbnails bucket.

- Collage service creates a collage of thumbnail pictures.

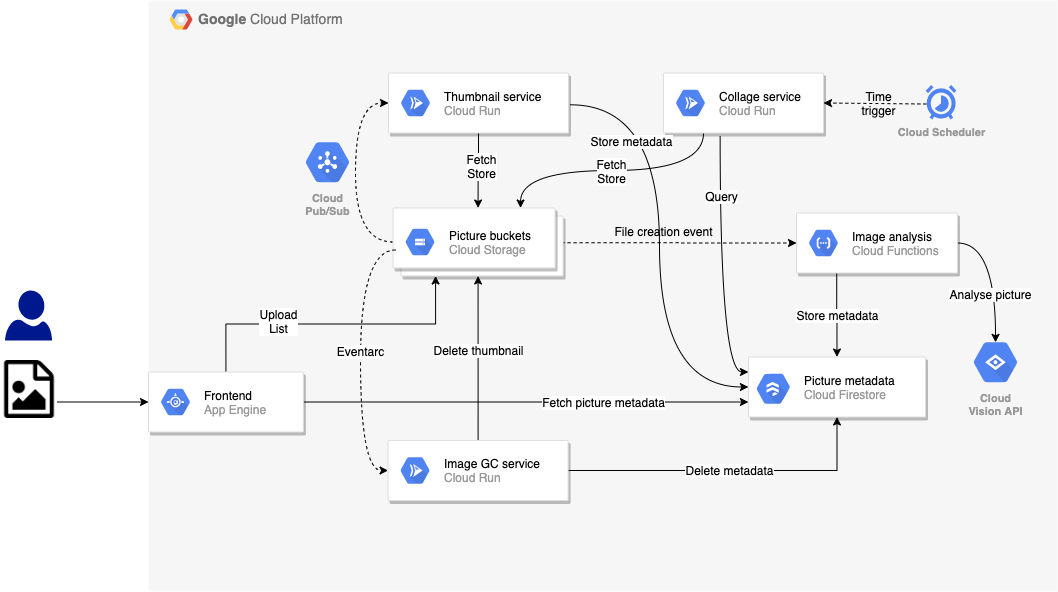

Image garbage collector service

One problem with the previous architecture was around image deletions. When an image was deleted from the pictures bucket, its thumbnail in the thumbnails bucket and its metadata in Firestore lingered around.

To fix this problem, we created a new Cloud Run service, image garbage collector, to clean up after an image is deleted.

To get Cloud Storage events to image garbage collector, we could have used Pub/Sub push messages, as we did in the thumbnail service. However, we decided to use Eventarc instead.

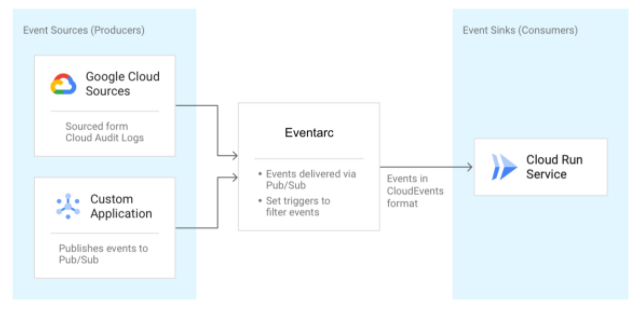

What is Eventarc

We announced Eventarc back in October as a new eventing functionality that allows you to send events to Cloud Run from more than 60 Google Cloud sources. This is done by reading AuditLogs from various sources and sending them to Cloud Run services as CloudEvents. There is also the option of reading events from Pub/Sub topics for custom applications.

We decided to try Eventarc as a new way to get events. We also wanted to avoid all the setup we had to do in the thumbnail service to get Cloud Storage events to Cloud Run via Pub/Sub. See details.

Current architecture

The current architecture now looks like this with Eventarc and image garbage collector:

Image garbage collector code

You can check out the full code for image garbage collector here. I’ll highlight some parts of the code.

Image garbage collector receives AuditLogs wrapped into CloudEvents from Eventarc. It first reads the CloudEvent using the CloudEvents SDK:

const { HTTP } = require("cloudevents");
...
const cloudEvent = HTTP.toEvent({ headers: req.headers, body: req.body });
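To make the SDK call less of a black box, here is a rough sketch of what reading a CloudEvent from an HTTP request involves. It assumes the event arrives in binary content mode, where the attributes travel in `ce-*` headers and the payload is the request body. The function name `parseBinaryCloudEvent` and the sample values are made up for illustration. The CloudEvents SDK does considerably more (structured mode, validation, extensions), so this is not a replacement for `HTTP.toEvent`.

```javascript
// Simplified sketch of binary content mode: attributes come from ce-* headers,
// the payload comes from the request body (assumed to be JSON here).
function parseBinaryCloudEvent(headers, body) {
  const event = {};
  for (const [name, value] of Object.entries(headers)) {
    const key = name.toLowerCase();
    if (key.startsWith('ce-')) {
      event[key.slice(3)] = value; // 'ce-type' becomes 'type'
    } else if (key === 'content-type') {
      event.datacontenttype = value;
    }
  }
  // Context attributes that the CloudEvents spec marks as required.
  for (const required of ['id', 'source', 'specversion', 'type']) {
    if (!(required in event)) {
      throw new Error(`Missing required CloudEvent attribute: ${required}`);
    }
  }
  // Accept either a raw JSON string or an already parsed body.
  event.data = typeof body === 'string' ? JSON.parse(body) : body;
  return event;
}

// Hypothetical request, for illustration only.
const sampleEvent = parseBinaryCloudEvent(
  {
    'ce-id': '1234',
    'ce-source': '//cloudaudit.googleapis.com/projects/my-project/logs/data_access',
    'ce-specversion': '1.0',
    'ce-type': 'google.cloud.audit.log.v1.written',
    'content-type': 'application/json',
  },
  { protoPayload: { methodName: 'storage.objects.delete' } }
);
console.log(sampleEvent.type);
```

In the actual service, the SDK call shown earlier is the better choice; the sketch only shows where the event type and payload come from.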

The data field of the CloudEvent has the actual AuditLog. There’s a Google Events library to parse the data into an AuditLog:

const {toLogEntryData} = require('@google/events/cloud/audit/v1/LogEntryData');
...
const logEntryData = toLogEntryData(cloudEvent.data);

The rest of the code extracts the bucket and object name from the event, and then deletes the object from the thumbnails bucket and its document from the Firestore collection:

// resourceName has the form projects/_/buckets/<bucket>/objects/<object>
const tokens = logEntryData.protoPayload.resourceName.split('/');
const bucket = tokens[3];
const objectName = tokens[5];

// Delete from thumbnails
try {
    await storage.bucket(bucketThumbnails).file(objectName).delete();
    console.log(`Deleted '${objectName}' from bucket '${bucketThumbnails}'.`);
}
catch(err) {
    console.log(`Failed to delete '${objectName}' from bucket '${bucketThumbnails}': ${err}.`);
}

// Delete from Firestore
try {
    const pictureStore = new Firestore().collection('pictures');
    const docRef = pictureStore.doc(objectName);
    await docRef.delete();
    console.log(`Deleted '${objectName}' from Firestore collection 'pictures'`);
}
catch(err) {
    console.log(`Failed to delete '${objectName}' from Firestore: ${err}.`);
}
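Splitting on `/` and picking fixed positions works when object names contain no slashes, which is the case for the pictures in the workshop. If you wanted to handle object names with a folder-like prefix, one possible approach is a small parser like the sketch below. It assumes the resource name has the form `projects/_/buckets/<bucket>/objects/<object>`; the function name `parseStorageResourceName` is hypothetical and not part of the workshop code.

```javascript
// Sketch: split an audit log resourceName into bucket and object name.
// Everything after "/objects/" is treated as the object name, slashes included.
function parseStorageResourceName(resourceName) {
  const pattern = /^projects\/[^/]+\/buckets\/([^/]+)\/objects\/(.+)$/;
  const match = pattern.exec(resourceName);
  if (!match) {
    return null; // Not an object-level resource name.
  }
  return { bucket: match[1], objectName: match[2] };
}

// Made-up resource names, for illustration only.
console.log(parseStorageResourceName('projects/_/buckets/pictures/objects/cat.png'));
console.log(parseStorageResourceName('projects/_/buckets/pictures/objects/2021/cat.png'));
```

Returning `null` for anything that does not match lets the caller skip events that are not about a single object, instead of deleting with an undefined name.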

Create a Trigger

Lastly, we need to connect events from Cloud Storage to the new service via Eventarc. This is done with a trigger:

gcloud beta eventarc triggers create trigger-${SERVICE_NAME} \
  --destination-run-service=${SERVICE_NAME} \
  --destination-run-region=europe-west1 \
  --matching-criteria="type=google.cloud.audit.log.v1.written" \
  --matching-criteria="serviceName=storage.googleapis.com" \
  --matching-criteria="methodName=storage.objects.delete" \
  --service-account=${PROJECT_NUMBER}-compute@developer.gserviceaccount.com

Notice that we’re matching for AuditLogs for Cloud Storage and specifically looking for storage.objects.delete events.
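The trigger already does this filtering, so the service should only see delete events. Still, a service might re-check the payload defensively before deleting anything. The sketch below shows one way to do that against the parsed AuditLog. The function name `isStorageObjectDelete` is hypothetical and this check is not part of the workshop code; it only relies on the `serviceName` and `methodName` fields of `protoPayload`.

```javascript
// Sketch: confirm that a parsed AuditLog entry describes a Cloud Storage
// object deletion, mirroring the criteria used in the trigger.
function isStorageObjectDelete(logEntryData) {
  const payload = (logEntryData && logEntryData.protoPayload) || {};
  return payload.serviceName === 'storage.googleapis.com'
    && payload.methodName === 'storage.objects.delete';
}

// Made-up payloads, for illustration only.
const deleteEntry = {
  protoPayload: {
    serviceName: 'storage.googleapis.com',
    methodName: 'storage.objects.delete',
  },
};
const createEntry = {
  protoPayload: {
    serviceName: 'storage.googleapis.com',
    methodName: 'storage.objects.create',
  },
};
console.log(isStorageObjectDelete(deleteEntry), isStorageObjectDelete(createEntry));
```

A handler could return early when the check fails, so an unexpected event is acknowledged without touching the thumbnails bucket or Firestore.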

If you want to try out our workshop and the new lab, here are some links:

- Pic-a-Daily Serverless Workshop (g.co/codelabs/serverless-workshop)

- Pic-a-daily: Lab 5—Image garbage collector

- Code for the workshop

Feel free to reach out to me on Twitter @meteatamel with any questions or feedback.