Introduction

In my previous post, Vertex AI’s Grounding with Google Search: how to use it and why, I explained why you need grounding with large language models (LLMs) and how Vertex AI’s grounding with Google Search can help ground LLMs with public, up-to-date data.

That’s great, but sometimes you need to ground LLMs with your own private data. How can you do that? There are many ways, but Vertex AI Search is the easiest, and that’s what I want to talk about today with a simple use case.

Cymbal Starlight 2024

For the use case, imagine you own the 2024 model of a fictitious vehicle called Cymbal Starlight. It has a user’s manual in PDF format (cymbal-starlight-2024.pdf) and you want to ask an LLM questions about this vehicle based on the manual.

Since LLMs are not trained on this user manual, they likely won’t be able to answer questions about the vehicle, but let’s see.

Without Grounding

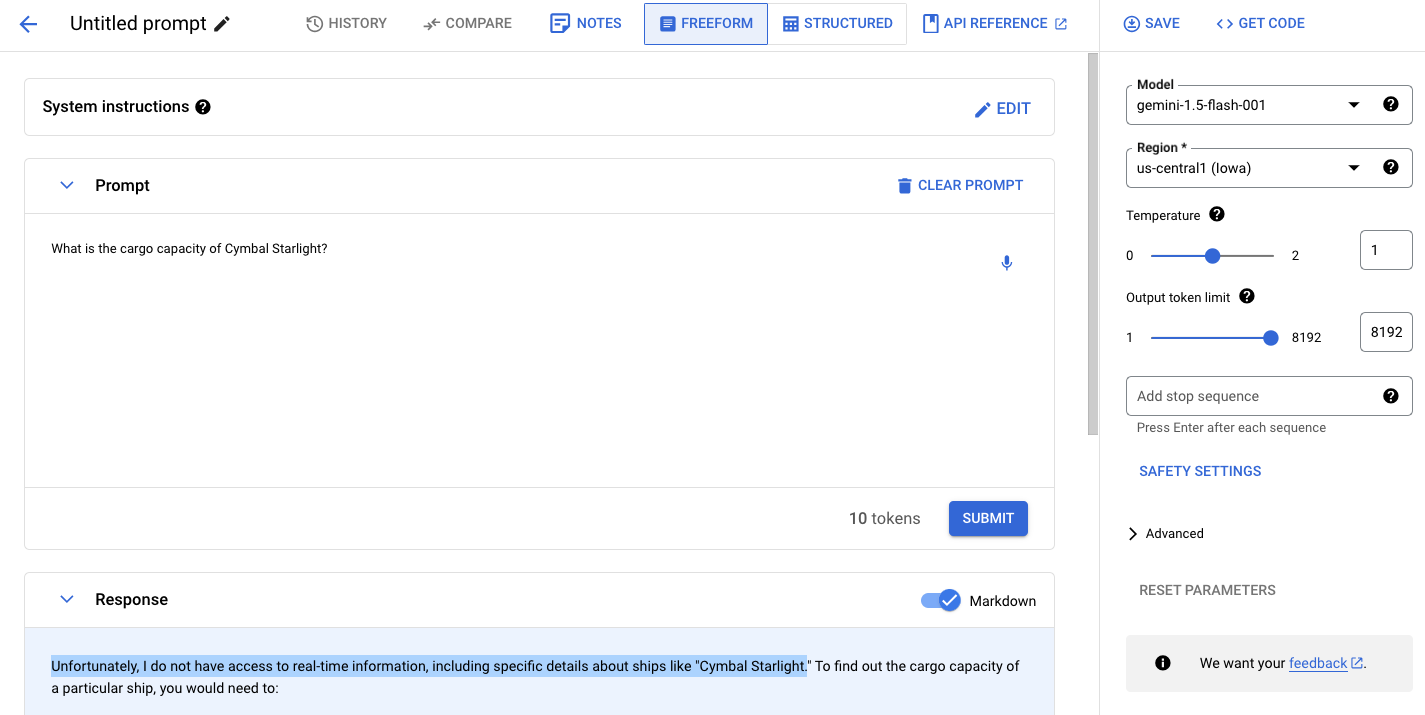

First, let’s ask a question to the LLM about the vehicle without any grounding. You can do this in the Vertex AI section of Google Cloud Console against any model.

Prompt: What is the cargo capacity of Cymbal Starlight?

Response: Unfortunately, I do not have access to real-time information, including specific details about ships like "Cymbal Starlight"

...

As you can see, the model thinks we’re talking about a ship and cannot really answer the question.

There’s also a main.py sample that you can run:

python main.py --project_id your-project-id

You get a similar response:

Prompt: What is the cargo capacity of Cymbal Starlight?

Response text: I do not have access to real-time information, including specific details about ships like the "Cymbal Starlight."

This is not surprising. Let’s now ground the LLM with this private PDF.

Create datastore with PDF

To use a PDF for grounding, you need to upload the PDF to a Cloud Storage bucket and set up a datastore to import from that bucket.

Create a Cloud Storage bucket with uniform bucket-level access and upload the PDF file:

gsutil mb -b on gs://cymbal-starlight-bucket

gsutil cp cymbal-starlight-2024.pdf gs://cymbal-starlight-bucket
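If you would rather script this step than use gsutil, here is a rough Python sketch of the same two commands using the google-cloud-storage client library. The function names, the "US" bucket location, and the choice to reuse the local file name as the object name are my assumptions, not part of the original sample.

```python
import os


def gcs_uri(bucket_name, blob_name):
    """Build the gs:// URI that the data store import will point to."""
    return f"gs://{bucket_name}/{blob_name}"


def create_bucket_and_upload(project_id, bucket_name, local_path, location="US"):
    """Create a bucket with uniform bucket-level access and upload one file.

    Assumption: location="US" is only a placeholder; pick what suits you.
    """
    # Imported lazily so the helper above works without the library installed.
    from google.cloud import storage

    client = storage.Client(project=project_id)

    # Equivalent of `gsutil mb -b on`: enable uniform bucket-level access.
    bucket = client.bucket(bucket_name)
    bucket.iam_configuration.uniform_bucket_level_access_enabled = True
    bucket = client.create_bucket(bucket, location=location)

    # Equivalent of `gsutil cp`: upload the PDF under its own file name.
    blob_name = os.path.basename(local_path)
    bucket.blob(blob_name).upload_from_filename(local_path)
    return gcs_uri(bucket_name, blob_name)
```

Calling create_bucket_and_upload("your-project-id", "cymbal-starlight-bucket", "cymbal-starlight-2024.pdf") should leave you with the same bucket contents as the gsutil commands above.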

Go to the Agent Builder section of the Google Cloud Console. Click Data Stores on the left, then click the Create Data Store button. Select Cloud Storage.

Point to the PDF file in the bucket and continue.

Give your datastore a name and click Create.

You need to wait until the import is complete, which can take up to 10-15 minutes.

Create a search app

Before you can use grounding, you need to create a search app and point it to the datastore you just created.

Go to the Agent Builder section of the Google Cloud Console and click the Create App button:

- Select the Search app type.

- For Content, select Generic.

- Make sure Enterprise edition features is enabled.

- Give your app a name and enter company info.

On the next page, choose the data store you created earlier and click Create.

Set up grounding with Vertex AI Search

Caution: Make sure the datastore import is complete before you continue. If you get an error message: “Cannot use enterprise edition features” or something similar, you might need to wait a little before trying again.

Let’s set up grounding with Vertex AI Search now.

Go back to the Vertex AI section of the Google Cloud Console and, in the Advanced section, select Enable grounding.

Customize grounding and point to the datastore you just created.

This is the format of the datastore string:

projects/{}/locations/{}/collections/{}/dataStores/{}

In my case, it’s as follows:

projects/genai-atamel/locations/global/collections/default_collection/dataStores/cymbal-datastore_1718710076267
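It's easy to get this string wrong, so here is a small sketch of a helper that builds it from its four parts and checks that an existing string has the right shape. The function names are hypothetical, and the defaults (global and default_collection) are simply the values from my path above, not something the API guarantees for every setup.

```python
import re

# Matches the four-part format shown above, capturing each segment by name.
DATA_STORE_PATH = re.compile(
    r"^projects/(?P<project>[^/]+)"
    r"/locations/(?P<location>[^/]+)"
    r"/collections/(?P<collection>[^/]+)"
    r"/dataStores/(?P<data_store>[^/]+)$"
)


def build_data_store_path(project, data_store, location="global",
                          collection="default_collection"):
    """Assemble the datastore string from its parts."""
    return (
        f"projects/{project}/locations/{location}"
        f"/collections/{collection}/dataStores/{data_store}"
    )


def parse_data_store_path(path):
    """Split a datastore string into its parts, or raise if it is malformed."""
    match = DATA_STORE_PATH.match(path)
    if match is None:
        raise ValueError(f"Not a valid datastore path: {path!r}")
    return match.groupdict()
```

For example, build_data_store_path("genai-atamel", "cymbal-datastore_1718710076267") produces exactly the string shown above.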

With grounding

Finally, we’re ready to ask questions about the vehicle with grounding enabled.

Let’s start with the previous question:

Prompt: What is the cargo capacity of Cymbal Starlight?

Response: The Cymbal Starlight 2024 has a cargo capacity of 13.5 cubic feet.

Let’s ask another question:

Prompt: What's the emergency roadside assistance phone number?

Response: 1-800-555-1212

You can also run the main.py Python sample with grounding:

python main.py --project_id your-project-id \
  --data_store_path projects/your-project-id/locations/global/collections/default_collection/dataStores/your-datastore-id

You get a similar response:

Prompt: What is the cargo capacity of Cymbal Starlight?

Response text: The Cymbal Starlight 2024 has a cargo capacity of 13.5 cubic feet.

It works!
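To give an idea of what a script like this does, here is a minimal sketch using the Vertex AI SDK for Python. It is not the actual main.py. The model name, the us-central1 location, and the function names are my assumptions, and older SDK versions may expose the grounding module under vertexai.preview.generative_models instead.

```python
import argparse

MODEL_NAME = "gemini-1.5-flash"  # assumption: use any Gemini model you have access to
PROMPT = "What is the cargo capacity of Cymbal Starlight?"


def parse_args(argv=None):
    """Parse the same two flags used in the commands above."""
    parser = argparse.ArgumentParser(description="Ask Gemini, optionally grounded.")
    parser.add_argument("--project_id", required=True)
    parser.add_argument("--data_store_path", default=None)
    return parser.parse_args(argv)


def build_tools(data_store_path):
    """Return a Vertex AI Search retrieval tool in a list, or [] when ungrounded."""
    if not data_store_path:
        return []
    # Imported lazily so the ungrounded path does not need these classes.
    from vertexai.generative_models import Tool, grounding

    retrieval = grounding.Retrieval(
        grounding.VertexAISearch(datastore=data_store_path)
    )
    return [Tool.from_retrieval(retrieval)]


def ask(project_id, prompt=PROMPT, data_store_path=None, location="us-central1"):
    """Send the prompt to the model, grounded only if a datastore path is given."""
    import vertexai
    from vertexai.generative_models import GenerativeModel

    vertexai.init(project=project_id, location=location)
    model = GenerativeModel(MODEL_NAME)

    # Pass None rather than an empty list when no grounding is requested.
    tools = build_tools(data_store_path) or None
    response = model.generate_content(prompt, tools=tools)
    return response.text
```

Calling ask("your-project-id") corresponds to the ungrounded run from earlier, while passing data_store_path adds the retrieval tool so the model can answer from the PDF.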

Conclusion

In this blog post, I explored Vertex AI’s grounding with Vertex AI Search. It’s the easiest way of grounding LLMs with your own private data. If you want to learn more, here are some more resources:

- Get started with generic search docs

- Grounding decision flowchart

- Getting Started with Grounding with Gemini in Vertex AI notebook

- Grounding for Gemini with Vertex AI Search and DIY RAG talk

- Fix My Car sample app