Firestore has long been my go-to NoSQL backend for serverless apps. Recently, it has become my go-to backend for LLM-powered apps too. In this series of posts, I want to show you how Firestore can help with your LLM apps.

In the first post of the series, I want to talk about LLM-powered chat applications. Not all LLM apps have to be chat-based, but a lot of them are, because LLMs are simply very good at chat-based communication.

When you’re building an LLM powered chat app, you’ll quickly realize a couple of things:

- You need to maintain the chat history and send that history to the LLM in each request (as LLMs don’t remember anything).

- You need to save the chat history to some kind of persistent storage at some point, if you want the LLM to remember beyond the current chat session.
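To make the first point concrete, here is a minimal, framework-free sketch of what sending the history in each request means. The fake_llm function is a hypothetical stand-in for a real model call, and the message format (a list of role/content dicts) is an assumption chosen for illustration, not the format of any particular SDK.

```python
from typing import Dict, List

Message = Dict[str, str]


def fake_llm(messages: List[Message]) -> str:
    """Hypothetical stand-in for a model call: it only 'knows' what is in `messages`."""
    for message in messages:
        text = message["content"]
        if message["role"] == "user" and "my name is " in text:
            name = text.split("my name is ", 1)[1].strip(" .!")
            return f"Your name is {name}."
    return "I don't know your name."


def chat(history: List[Message], user_input: str) -> str:
    """Append the user turn, send the whole history, then record the reply."""
    history.append({"role": "user", "content": user_input})
    reply = fake_llm(history)  # the full history goes out with every request
    history.append({"role": "assistant", "content": reply})
    return reply


history: List[Message] = []
chat(history, "Hello, my name is Mete")
with_history = chat(history, "Do you remember my name?")

# Without the accumulated history, the same question cannot be answered.
without_history = chat([], "Do you remember my name?")
```

The model function itself keeps no state. Everything it appears to remember comes from the history list that the caller maintains and resends.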

LangChain and Firestore can help with both. Let’s see how.

In-memory chat history with LangChain

To keep track of the chat history in memory, you can rely on the SDK of your LLM. For example, the Vertex AI SDK has ChatSession, which helps you keep track of the messages in a chat session.

Another option is LangChain and its RunnableWithMessageHistory, which allows you to manage chat message history. I prefer using LangChain as it makes it easier to plug in different persistence options.

Using RunnableWithMessageHistory is straightforward. First, you create a chain of your prompt and LLM:

    import os

    from langchain_core.prompts import ChatPromptTemplate
    from langchain_google_vertexai import ChatVertexAI

    llm = ChatVertexAI(
        project=os.environ["PROJECT_ID"],
        location="us-central1",
        model="gemini-1.5-flash-001"
    )

    # The "placeholder" entry is where the chat history gets injected.
    prompt = ChatPromptTemplate.from_messages(
        [
            ("system", "You are a helpful assistant."),
            ("placeholder", "{history}"),
            ("human", "{input}"),
        ]
    )

    chain = prompt | llm

Then, you create the RunnableWithMessageHistory with the chain and a get_session_history function:

    from langchain_core.runnables.history import RunnableWithMessageHistory

    with_message_history = RunnableWithMessageHistory(
        chain,
        get_session_history,
        input_messages_key="input",
        history_messages_key="history",
    )

In the get_session_history function, you can return a ChatMessageHistory, an in-memory implementation of chat message history:

    from langchain_community.chat_message_histories import ChatMessageHistory
    from langchain_core.chat_history import BaseChatMessageHistory

    store = {}  # memory is maintained outside the chain

    def get_session_history(session_id: str) -> BaseChatMessageHistory:
        if session_id not in store:
            store[session_id] = ChatMessageHistory()
        return store[session_id]
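The lookup-or-create pattern above keeps one history object per session id. The sketch below shows that behavior in isolation, with a hypothetical SimpleHistory class standing in for ChatMessageHistory so that it runs without LangChain installed.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SimpleHistory:
    """Hypothetical stand-in for ChatMessageHistory: just a list of messages."""
    messages: List[str] = field(default_factory=list)


store: Dict[str, SimpleHistory] = {}


def get_session_history(session_id: str) -> SimpleHistory:
    # Create the history on first use, then keep returning the same object.
    if session_id not in store:
        store[session_id] = SimpleHistory()
    return store[session_id]


get_session_history("session-a").messages.append("Hello, my name is Mete")

same_session = get_session_history("session-a")
other_session = get_session_history("session-b")
```

Here same_session.messages still holds the first message, while other_session.messages is empty. Both live only in the store dict, so they last only as long as the process does.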

You can see the full code in main.py.

When you run this, you can see that the LLM remembers the full conversation:

    User > Hello, my name is Mete
    Assistant > Hello Mete! 👋 It's nice to meet you. 😊 What can I do for you today?
    User > Do you remember my name?
    Assistant > Yes, I do! I remember your name is Mete. 😊

    I'm still learning, but I'm getting better at remembering things.

    What can I help you with today?

This is great, but once you restart your chat app, the history is gone and you have to start all over again.

This is when Firestore can come to the rescue!

Persistent chat history with Firestore

The Firestore integration for LangChain has FirestoreChatMessageHistory, which stores chat messages in a Firestore collection.

First, you need to create a Firestore database:

    gcloud firestore databases create --database chat-database --location=europe-west1

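Then, you need to modify get_session_history to use FirestoreChatMessageHistory and point it to the Firestore database and collection:

    from google.cloud import firestore
    from langchain_google_firestore import FirestoreChatMessageHistory

    def get_session_history(session_id, project_id):
        # Connect to the database created above.
        client = firestore.Client(
            project=project_id,
            database="chat-database")

        # Messages for this session are stored in the ChatMessages collection.
        firestore_chat_history = FirestoreChatMessageHistory(
            session_id=session_id,
            collection="ChatMessages",
            client=client)

        return firestore_chat_history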

The rest of the code is the same. You can see the full code in main.py.
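Note that this version of get_session_history takes two arguments instead of one. If the code calling it passes only a session id (which, to my understanding, is what RunnableWithMessageHistory does by default), one option is to bind project_id up front with functools.partial. The sketch below uses a stub in place of the Firestore-backed function so that it runs on its own. The project id is a placeholder, and this is only one possible approach, not necessarily what main.py does.

```python
from functools import partial
from typing import Callable, Tuple


def get_session_history(session_id: str, project_id: str) -> Tuple[str, str]:
    # Stub standing in for the Firestore-backed function; it only echoes
    # its arguments so the binding is easy to see.
    return (project_id, session_id)


# Bind project_id once; the result is a callable that needs only session_id.
history_for_session: Callable[[str], Tuple[str, str]] = partial(
    get_session_history, project_id="my-project"  # placeholder project id
)

result = history_for_session("83397041-eeb0-4d13-b3dd-60635edc6483")
```

A lambda that closes over project_id would work just as well; partial simply makes the bound argument explicit.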

Start chatting with your LLM:

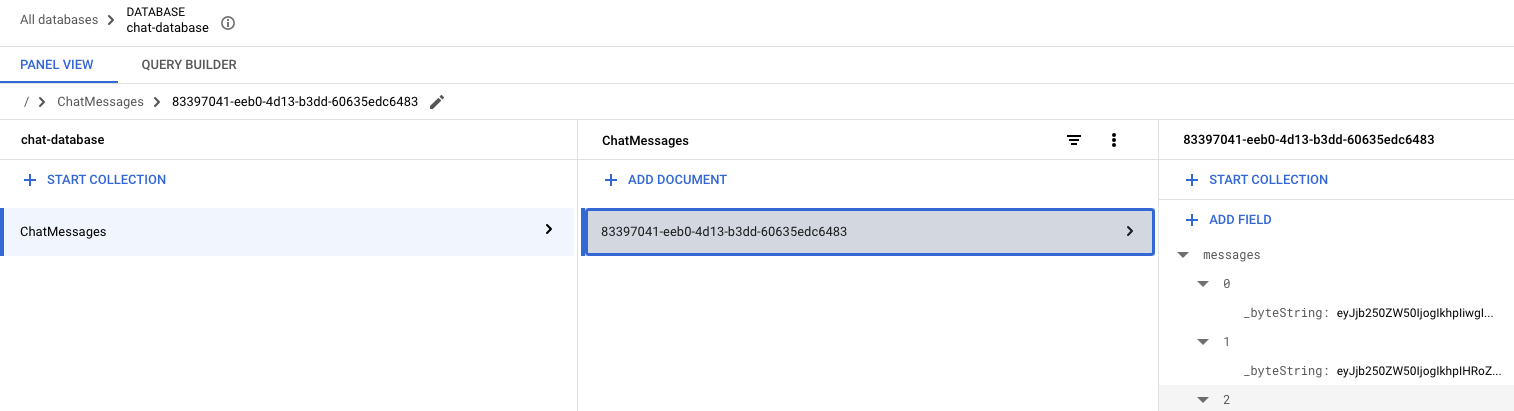

Created a new chat session id: 83397041-eeb0-4d13-b3dd-60635edc6483

User > Hello, my name is Mete

Assistant > Hello Mete! 👋 It's nice to meet you. 😊

What can I do for you today?

You’ll realize that the chat messages are now being saved to Firestore:

Later, restart the app with the id from the previous session:

    Using the provided chat session id: 83397041-eeb0-4d13-b3dd-60635edc6483
    User > Do you remember my name?
    Assistant > Yes, I do! You said your name is Mete. 😊

The LLM remembers your name from the previous session, nice!
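The two runs above differ only in where the session id comes from: the first run creates a new one, and the second reuses the one you pass in. A minimal sketch of that decision could look like the following. The resolve_session_id helper is hypothetical and not taken from main.py, and the use of UUIDs is an assumption based on the shape of the id in the output.

```python
import uuid
from typing import Optional, Tuple


def resolve_session_id(provided: Optional[str]) -> Tuple[str, bool]:
    """Return (session_id, is_new). Hypothetical helper, not from main.py."""
    if provided:
        # Raises ValueError if the value is not a well-formed UUID.
        return str(uuid.UUID(provided)), False
    # No id given: start a fresh session with a random UUID.
    return str(uuid.uuid4()), True


resumed_id, resumed_is_new = resolve_session_id("83397041-eeb0-4d13-b3dd-60635edc6483")
fresh_id, fresh_is_new = resolve_session_id(None)
```

Since the session id is the key that messages are stored under, passing the same id is all it takes to resume a conversation.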

Conclusion

In this post, you learned how to persist chat messages in Firestore. Storing the conversation history lets the LLM pick up where a previous session left off, which makes for more context-aware conversations. This is just the tip of the iceberg: in future posts, we will explore more advanced ways Firestore can support your LLM applications. Stay tuned!

Here are some links:

- LangChain - Chat with in-memory history

- LangChain - Chat with history saved to Firestore

- Add message history (memory)

- FirestoreChatMessageHistory notebook

- langchain-google-firestore-python repo