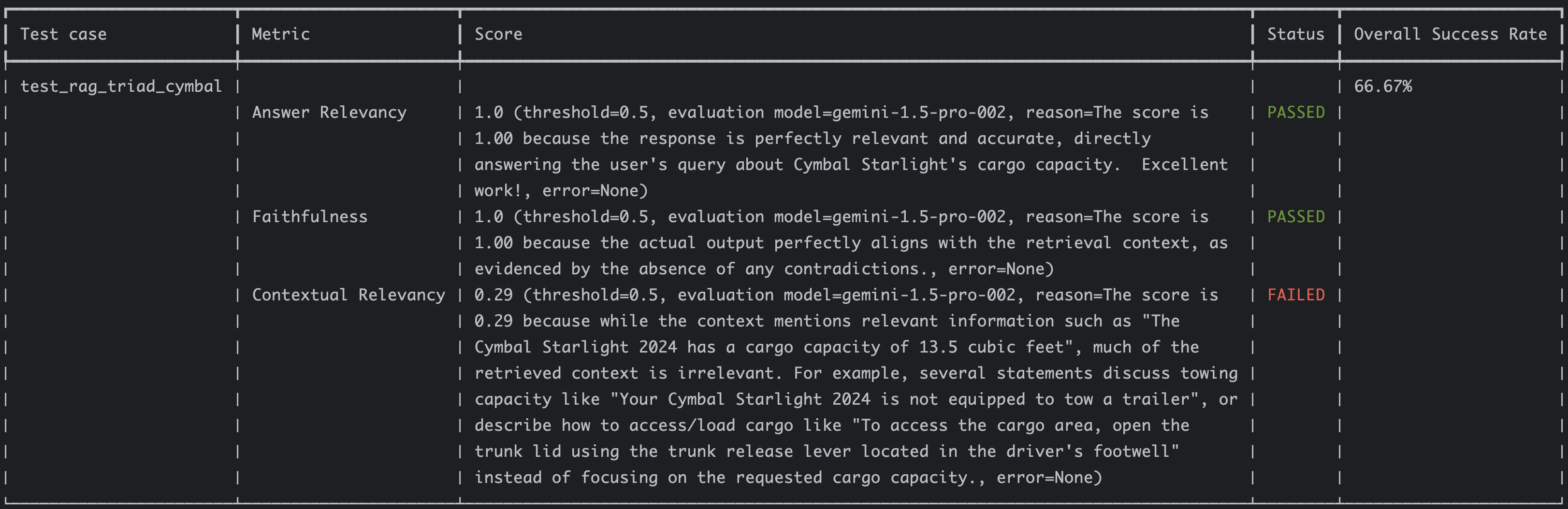

In my Gen AI Evaluation Service - An Overview post, I introduced Vertex AI’s Gen AI evaluation service and talked about the various classes of metrics it supports. In today’s post, I want to dive into computation-based metrics: what they provide and where they fall short.

Computation-based metrics are metrics that can be calculated using a mathematical formula. They’re deterministic – the same input produces the same score, unlike model-based metrics where you might get slightly different scores for the same input.
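To make "deterministic" concrete, here is a minimal Python sketch of two formula-based scores: an exact-match check and a unigram-overlap F1. The function names `exact_match` and `unigram_f1` are hypothetical and written only for illustration; this is not the evaluation service's API or its implementation, just the general idea of scoring a prediction against a reference with a fixed formula.

```python
from collections import Counter


def exact_match(prediction: str, reference: str) -> float:
    """Return 1.0 if the texts are identical after trimming whitespace, else 0.0."""
    return 1.0 if prediction.strip() == reference.strip() else 0.0


def unigram_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of unigram precision and recall over whitespace tokens."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        return 0.0
    # Multiset intersection counts each shared token at most as often
    # as it appears in both texts.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    prediction = "the cat sat"
    reference = "the cat sat down"
    # Calling the functions repeatedly on the same pair always yields the same score.
    print(exact_match(prediction, reference))
    print(unigram_f1(prediction, reference))
```

Because nothing here depends on sampling or on a judge model, running it twice on the same prediction and reference gives identical scores, which is the property that sets these metrics apart from model-based ones.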
